QUICKBITS

We’ve made an important step towards 24/7 availability

- With the Dynamic Scheduler, we can now co-process completely independent tasks on the same Quantum Processing Unit (QPU), eliminating idle times.

- This improvement will enhance the overall user experience, making it much simpler for our customers to run tasks on our computers.

- Going forward, we’ll be able to expand windows, giving much longer access than we have typically been able to offer under our exclusive modes.

Katy Alexander

MARKETING DIRECTOR

Katy is the Marketing Director at OQC. Prior to joining OQC, she developed and scaled marketing and analytics functions for startups and large listed companies. Passionate about using data to guide strategic decisions, Katy’s unique blend of analytical rigour and creative expression enables her to tackle diverse challenges effectively. In her spare time, she champions diversity in STEM through the creation of games and education resources for primary schools.

CO-PROCESSING – ANOTHER STEP TOWARDS 24/7 AVAILABILITY

Introducing co-processing

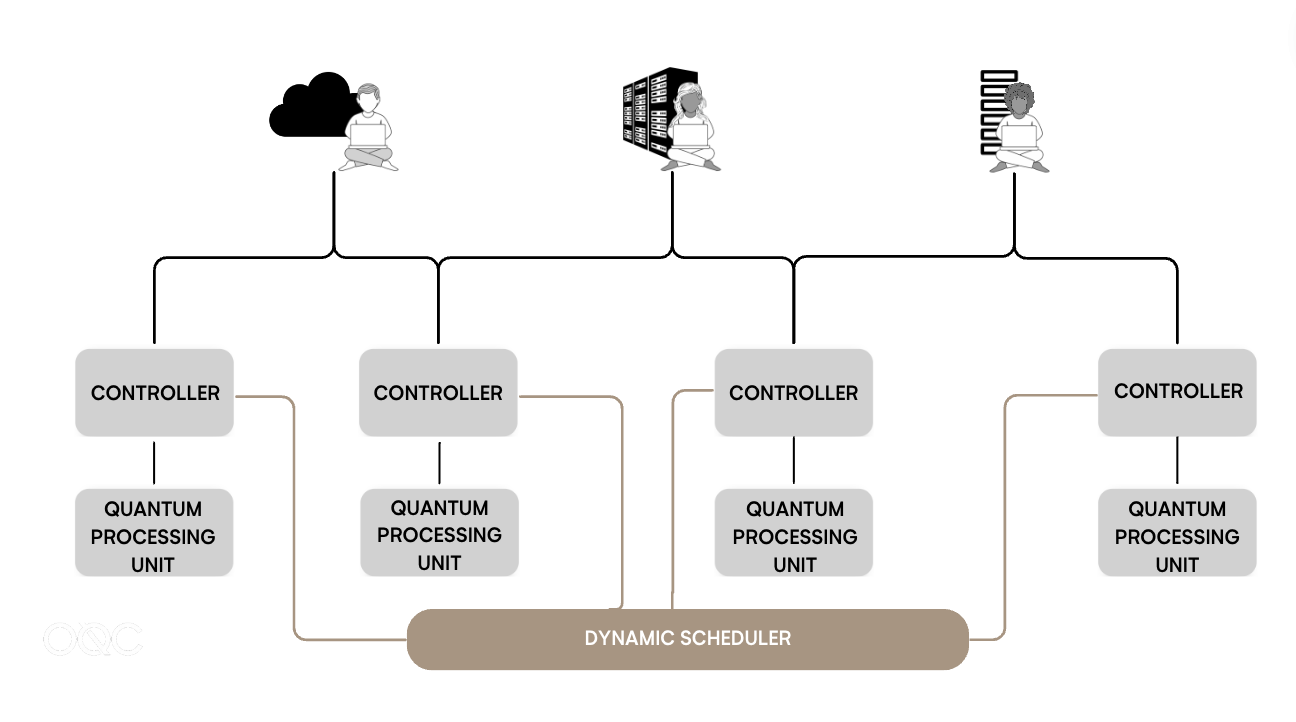

We are thrilled to unveil the Dynamic Scheduler, which means you no longer need to wait for an exclusive time window to submit your tasks. Until now, unique windows of time had to be exclusively scheduled for sovereign user groups. Even if the underlying quantum resources were not being utilised, they could not be released to other users. All users within those deployments had to abide by the same data management policies, so exclusive windows were required for each group. With the Dynamic Scheduler, we can now co-process completely independent tasks on the same Quantum Processing Unit (QPU), eliminating idle times.

Irrespective of how you access Lucy, whether through private or public cloud, data centre access, or direct access, you will no longer have to wait for an exclusive time window. This significant improvement will enhance the overall user experience, making it much simpler for our customers to run tasks on our computers. Going forward, we’ll be able to expand windows for these co-processing customers easily, giving much longer access than we have typically been able to offer under our exclusive modes.

How it works

Centralised Management

We have moved from a simple one-to-one mapping between our deployed task services and controllers to a much more flexible brokered arrangement, in which a new scheduler serves as the central decision-maker.

Our preferred mode is to keep one scheduler in each global region to effectively manage the quantum computing estate in that region. For any QPU, we can establish a scheduled window, its occupancy and the running mode (more on this later).

Additionally, we have provisions in place for one-off windows that can override existing schedules if needed. This microservice has secure communication with our task services, and its own unique administration scheme, separate from all other task deployments.
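To make the idea of scheduled windows and one-off overrides concrete, here is a minimal Python sketch. It is not OQC's implementation: the `Window` class, its field names and the rule that a one-off window always wins over a regular one are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Window:
    """A scheduled window on one QPU (all field names are hypothetical)."""
    qpu: str
    start: datetime
    end: datetime
    occupants: tuple[str, ...]  # user groups admitted during the window
    run_mode: str               # e.g. "fair_sharing"
    one_off: bool = False       # one-off windows override regular ones

    def covers(self, qpu: str, at: datetime) -> bool:
        # Half-open interval: the start is included, the end is not.
        return self.qpu == qpu and self.start <= at < self.end


def active_window(windows: list[Window], qpu: str, at: datetime) -> Optional[Window]:
    """Return the window in force for `qpu` at time `at`, or None if there is none."""
    candidates = [w for w in windows if w.covers(qpu, at)]
    if not candidates:
        return None
    # A one-off window beats a regular one; the latest start time breaks ties.
    return max(candidates, key=lambda w: (w.one_off, w.start))
```

For example, a regular day-long window shared by two groups could be interrupted by a one-hour one-off window; `active_window` would return the one-off window for any instant inside that hour and the regular window otherwise.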

Brokering the Controllers – A dive into the scheduler-receiver communication

We’ve established a dedicated channel that serves as a seamless means of communication between the Scheduler and on-premise Controllers. These secure channels allow two-way communication, letting real-time information flow up from the Controllers to the Scheduler. This includes important health check statuses that allow us to monitor the overall system. Moreover, instructions can flow in the opposite direction, granting the Scheduler the ability to command each Controller individually.

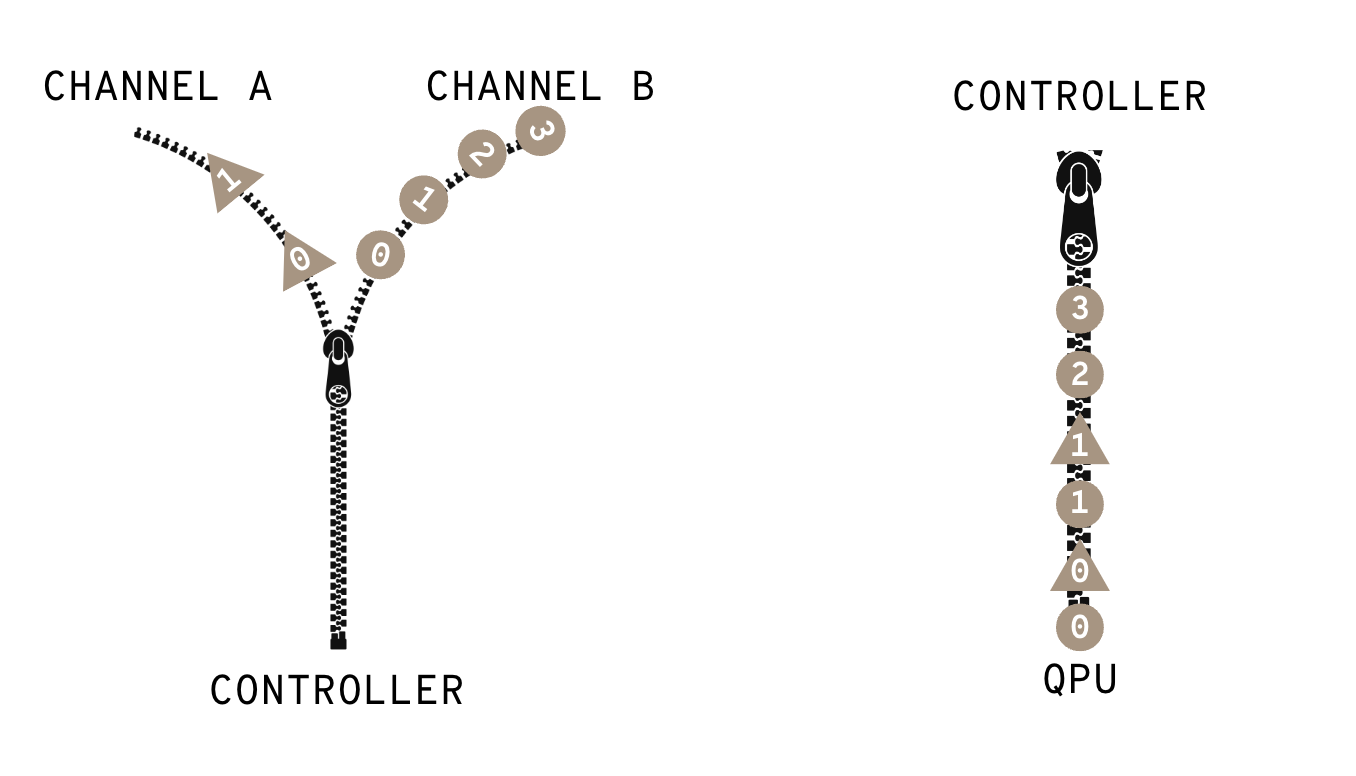

Dynamically defining run modes – Into the MessageSource

Run instructions provided by the Scheduler are processed by our Controllers. These instructions include the running operational mode, which channels they apply to, and what the relative priority is. By acting upon these instructions, the Controllers apply the designated scheme to the inbound and outbound channels.

These instructions can also specify that real-time information known to the Controllers should be taken into account, so that continual fine-grained updates from the Scheduler are not required. At any time, the Scheduler can override the running mode by sending fresh instructions. This enables us to provide a detailed specification for how our Controllers consume messages.
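As a rough illustration of what such a run instruction might carry, and of how a fresh instruction replaces an earlier one, consider the Python sketch below. The `RunInstruction` and `Controller` classes, their fields and the "higher number means higher priority" convention are all hypothetical; the actual message format is not described here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RunInstruction:
    """One instruction from the Scheduler (hypothetical field names)."""
    mode: str                        # running mode, e.g. "fair_sharing"
    channels: tuple[str, ...]        # channels the mode applies to
    priority: int                    # relative priority; higher runs first (assumed)
    use_realtime_info: bool = False  # let the Controller consult its own live state


class Controller:
    """Keeps track of the instruction currently in force on each channel."""

    def __init__(self) -> None:
        self._by_channel: dict[str, RunInstruction] = {}

    def apply(self, instruction: RunInstruction) -> None:
        # Fresh instructions replace whatever was in force on those channels.
        for channel in instruction.channels:
            self._by_channel[channel] = instruction

    def mode_for(self, channel: str) -> Optional[str]:
        instruction = self._by_channel.get(channel)
        return instruction.mode if instruction else None

    def channel_order(self) -> list[str]:
        # Highest priority first; the channel name breaks ties deterministically.
        return sorted(self._by_channel, key=lambda c: (-self._by_channel[c].priority, c))
```

Applying a second instruction that names an already-configured channel overrides only that channel, which mirrors the idea that the Scheduler can change the running mode at any time without resending everything.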

One simple application of this technology is a “Fair Sharing” scheme, allowing any tenant of an active window to gain equal priority on running the next task. While Fair Sharing is our preferred mode, the scheme is extensible, and we can apply any kind of sequencing we wish across the target channels. This might include allowing access for internal systems only while the QPU is unutilised, but otherwise prioritising customer traffic. It might also be used to apply short-lived, focused processing on a single channel for hybrid workflow processing, by reading task metadata.
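One way to picture Fair Sharing, combined with the "internal systems only while the QPU is unutilised" variant, is a round-robin over tenant channels with internal traffic as a fallback. The Python sketch below is only one possible reading of the scheme: the `FairSharingSource` class, its method names and the round-robin policy itself are assumptions, not a description of the production MessageSource.

```python
from collections import deque
from typing import Optional


class FairSharingSource:
    """Round-robin over tenant channels; internal tasks run only when tenants are idle."""

    def __init__(self, tenant_channels: list[str], internal_channel: str = "internal"):
        self._queues: dict[str, deque] = {name: deque() for name in tenant_channels}
        self._internal: deque = deque()
        self._internal_name = internal_channel
        self._order = deque(tenant_channels)  # rotation order for tenants

    def submit(self, channel: str, task: str) -> None:
        if channel == self._internal_name:
            self._internal.append(task)
        else:
            self._queues[channel].append(task)

    def next_task(self) -> Optional[tuple[str, str]]:
        """Return (channel, task) for the next task to run, or None if nothing is queued."""
        # Visit each tenant at most once, starting with whoever is next in the rotation.
        for _ in range(len(self._order)):
            channel = self._order[0]
            self._order.rotate(-1)  # this channel moves to the back either way
            if self._queues[channel]:
                return channel, self._queues[channel].popleft()
        # No customer traffic is waiting, so internal work may use the QPU.
        if self._internal:
            return self._internal_name, self._internal.popleft()
        return None
```

With two tenants, one of which has queued three tasks and the other one, the tenants alternate until the second tenant's queue is empty; internal tasks are served only after both tenant queues have drained.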

“Our solution allows us to zip together any number of incoming channels close to the quantum processing unit (QPU), enabling real-time and static information to determine how to best route traffic onto our quantum hardware. Our objective is to have zero idle time on any of our global live QPUs.”

Owen Arnold, Lead Software Engineer, OQC

Instructions from Schedulers are not limited to the running mode alone. We also have the capability to remotely disconnect our Controllers from quantum hardware in the case of maintenance requirements. Additionally, we can put the Controllers into background running modes, allowing them to continue processing task information while disconnected from the hardware, and we can reload calibration information from disk without shutting down the Controllers. All of these measures are implemented to ensure that our backend services remain operational for as long as possible.

Bolstering Security – Internal encryption and message receipting

By following this co-processing model, we can deploy data-sovereign top-level task services and databases that are completely isolated from one another. Each applies its own data management rules, including data retention times, TTLs, security settings and any other policies, which can be built to match those of the parent organisation or even integrate with them directly. In our previous setup, such customisation was not feasible, and our partners were required to adopt our rules without exception.

In addition to securing data at rest, we have taken this opportunity to enhance security for data in transit by applying per-channel encryption to all of our channels, including our instruction channel. Our services also bookkeep tasks, immediately identifying and rejecting any traffic that did not originate from the authorised/original source. Although these security layers may appear redundant, we are committed to using every possible measure to safeguard customer information.
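The bookkeeping idea can be sketched as a small ledger that remembers which source submitted each task and refuses anything that claims a different origin. This is a simplified illustration under stated assumptions: the `TaskLedger` class and its methods are hypothetical, and a real deployment would also need to authenticate the source identity rather than trust a plain string.

```python
class TaskLedger:
    """Records which source submitted each task and rejects mismatched traffic."""

    def __init__(self) -> None:
        self._origin: dict[str, str] = {}  # task id -> source that submitted it

    def register(self, task_id: str, source: str) -> None:
        # A task id may only be registered once, so a later sender cannot claim it.
        if task_id in self._origin:
            raise ValueError(f"task {task_id!r} is already registered")
        self._origin[task_id] = source

    def accept(self, task_id: str, source: str) -> bool:
        """True only if `source` is the one that originally submitted `task_id`."""
        return self._origin.get(task_id) == source
```

Traffic for an unknown task id, or for a known id arriving from a different source, is rejected in the same way: `accept` simply returns `False`.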

Thanks to our innovative solution, we can now achieve longer running times and better resource utilisation within any active window than was possible with previous methods. We have eliminated idle times by co-processing completely independent tasks on the same QPU without any additional overhead. Monitoring and measuring the device in situ (while live for customers) is now possible using any of our internal formats, OpenQASM, OpenPulse or QIR.

With immediate effect, we’ll be moving our internal R&D and monitoring into co-processing, in joint tenancy with selected external customer groups.

“Our mission is to make quantum computing accessible to humanity, and to accomplish that, we need to provide enterprise ready solutions. With the rollout of this feature, we are one step closer to realising that future.”

Simon Phillips, CTO, OQC

We remain dedicated to pushing the boundaries of quantum computing and delivering the best possible experience to our customers.

Stay tuned for more updates on our exciting advancements in the field.
