QUICKBITS

Hybrid in your back garden

- The strategic placement of OQC Toshiko in colocation data centres is a carefully designed choice to remedy runtime challenges and provide a better user experience.

- Our collaboration with NVIDIA gives users the additional compute capabilities of the NVIDIA GH200 Grace Hopper Superchip.

- Our initial testing shows a consistent 30% improvement in speed on hybrid jobs, and we've identified a 2x improvement in round-trip times.

Jamie Friel

TECHNOLOGY MANAGER: QUANTUM THEORY

Jamie is responsible for building software solutions that will help build a quantum future, in particular a bespoke quantum compiler that will allow groundbreaking problems to be solved on OQC's hardware. Before joining OQC, Jamie worked as a software developer for a grid battery company, contributing to the UK national grid's goal of bringing greenhouse gas emissions to net zero by 2050.

Quantum computing offers a fundamentally different take on computation, one with extraordinary power and potential compared with its classical counterparts. Quantum computers will not fully replace classical computers; for the foreseeable future they will sit alongside them. Setting up this symbiotic relationship means catering for both. We've taken a groundbreaking step by deploying our latest QPU, OQC Toshiko, directly into colocation data centres, positioning quantum compute capabilities at the heart of classical computing architecture. By bringing our digital infrastructure into commercial data centres alongside our quantum hardware, we are discovering exciting collaborations, such as with NVIDIA. This collaboration has flourished: our teams have worked together to bring the NVIDIA CUDA Quantum platform to our quantum computers, and to install NVIDIA DGX systems with the NVIDIA GH200 Grace Hopper Superchip in commercial data centres alongside our quantum systems, so that we can conduct hybrid quantum-classical experiments. We're actively exploring how our software and hardware ecosystem, fully integrated into these environments, can cater to the requirements of quantum algorithms.

Addressing runtime challenges

One of the most significant challenges in hybrid quantum computing is long runtimes: every iteration of a hybrid algorithm waits on a round trip between the classical and quantum resources, so waiting periods add up iteration after iteration. Acknowledging this bottleneck, we are focused on minimising latency between quantum and classical computation. Our strategic placement of OQC Toshiko in commercial data centres is a carefully designed choice driven by key considerations including scalability, accessibility, and enhanced collaboration opportunities with industry leaders like NVIDIA. This decision lets OQC tackle runtime challenges directly and ultimately provide a better experience for enterprise users.
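
A simple cost model makes the bottleneck concrete. Assume, purely for illustration, a hybrid job of $N$ iterations, where each iteration spends $t_{\mathrm{cl}}$ on classical optimisation and makes $n_q$ quantum calls, and each call pays a network round trip $t_{\mathrm{rt}}$, a queue wait $t_{\mathrm{q}}$ and a QPU execution time $t_{\mathrm{exec}}$:

$$
T_{\mathrm{job}} = N\left(t_{\mathrm{cl}} + n_q\left(t_{\mathrm{rt}} + t_{\mathrm{q}} + t_{\mathrm{exec}}\right)\right)
$$

The round trip $t_{\mathrm{rt}}$ is paid $N n_q$ times, so reducing it shortens every iteration of the job, not just the first submission.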

Build the infrastructure, not the engine first

The core of our strategy revolves around building the necessary infrastructure to support quantum computing by utilising existing environments: the world of high-performance compute does not revolve around quantum computing, and it shouldn't. Long before quantum computing was a possibility, commercial data centres had solved many important infrastructure challenges that we now wish to use. By integrating quantum compute into these environments now, we are able to explore, test and develop quantum computing today; if education, connectivity, simulation and ecosystem development are only conducted once fault-tolerant hardware is ready, a huge opportunity will be lost.

You wouldn't put an F1 engine in a classic Mini Cooper, so why do it with a quantum computer?

By leveraging the benefits of global commercial data centres, we can easily scale our software and hardware ecosystem to meet evolving computational needs while ensuring data sovereignty and security for our users. By encapsulating both the physical quantum computer and auxiliary services inside the colocation environment, customers never need to send data over the public cloud.

OQC Toshiko alongside high-performance computers in colocation data centres represents a significant milestone in quantum computing accessibility. All elements of the quantum computer are enclosed in a single, well-controlled physical and digital environment, which puts the network much more firmly under our control. We see much faster and more predictable round-trip times than with our previous lab-based solutions. We have measured this across commercial data centres on the Brookfield campus, using dedicated 100 Gb/s optical links rather than the public internet, and have tested this model with our latest OQC Toshiko services in those data centres. Within the metro area, we reliably obtain more than a 2x speedup over the public internet, over a single hop. This also offers much better data security. Given the security we can provide around our offering, we hope to encourage customers to experiment with real data rather than toy examples.
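
Round-trip comparisons like these come down to timing many request/response pairs and looking at both the typical value and the spread. The sketch below shows one way to do that in Python, assuming a plain TCP echo endpoint; to keep it self-contained, a local echo server stands in for the remote service. The function names are illustrative only and are not part of OQC's tooling; to compare, say, a dedicated link against the public internet, only the host and port would change.

```python
import socket
import statistics
import threading
import time


def start_echo_server(host: str = "127.0.0.1") -> tuple[socket.socket, int]:
    """Start a tiny TCP echo server on an ephemeral port (stand-in for a remote endpoint)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))
    server.listen(1)
    port = server.getsockname()[1]

    def serve() -> None:
        conn, _ = server.accept()
        with conn:
            conn.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
            while True:
                data = conn.recv(4096)
                if not data:  # client closed the connection
                    break
                conn.sendall(data)

    threading.Thread(target=serve, daemon=True).start()
    return server, port


def measure_rtt(host: str, port: int, samples: int = 50, payload: bytes = b"ping") -> list[float]:
    """Time `samples` request/response round trips over one TCP connection, in seconds."""
    rtts = []
    with socket.create_connection((host, port)) as sock:
        # Disable Nagle's algorithm so small payloads are sent immediately.
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(samples):
            start = time.perf_counter()
            sock.sendall(payload)
            received = b""
            while len(received) < len(payload):  # the echo may arrive in pieces
                received += sock.recv(4096)
            rtts.append(time.perf_counter() - start)
    return rtts


if __name__ == "__main__":
    server, port = start_echo_server()
    rtts = measure_rtt("127.0.0.1", port)
    server.close()
    print(f"median RTT: {statistics.median(rtts) * 1e6:.1f} µs, "
          f"stdev: {statistics.stdev(rtts) * 1e6:.1f} µs")
```

Reporting the median and standard deviation, rather than a single sample, captures predictability as well as raw speed.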

Not only is this better from a security perspective, it also ensures much lower round-trip times through fewer hops and higher bandwidth on dedicated connections. This advancement opens doors for both R&D and industrial applications, making quantum computational resources more attainable and interconnected. It is a tangible stride towards making quantum computing more accessible and accelerating the pace at which industries can experiment with it to harness potential quantum advantage in real-world applications.

The solution

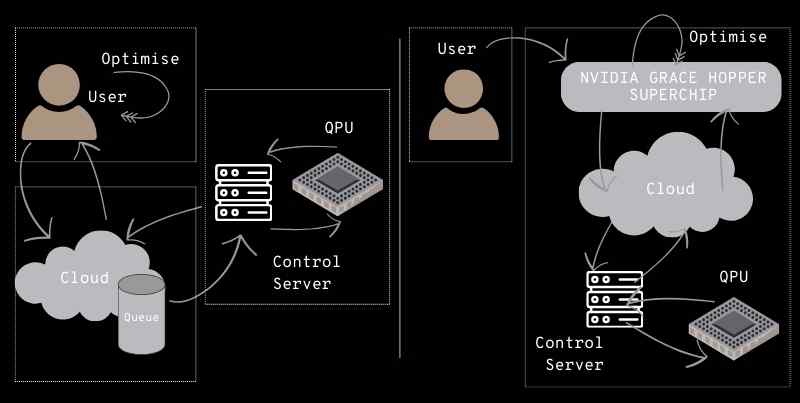

The left-hand side of the image shows the previous flow of data for a single iteration of a hybrid problem. The user submits their quantum program to our cloud, where it enters our first-in, first-out queue system before reaching the control server and ultimately the QPU. The key bottlenecks are the network boundaries between the user and the cloud, and then between the cloud and the control server. With the NVIDIA GH200 Grace Hopper Superchip (right-hand side of the image), the user submits a hybrid problem to the superchip, which submits to the cloud, and the rest of the flow follows as before. The key difference is that once the user has submitted data to the NVIDIA GH200 Grace Hopper Superchip, all data transfers remain within the local network of the data centre.
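
The difference between the two flows can be sketched as a small model: hops paid once per job versus hops paid on every iteration. In this Python sketch the hop names follow the figure description, but the latency figures are placeholders chosen only to illustrate the arithmetic, not measurements, and the `leaves_site` flag (whether a hop crosses a network boundary out of the data centre) is an assumption about each hop.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    name: str
    latency_ms: float   # one-way latency; placeholder values only
    leaves_site: bool   # True if the hop crosses a boundary out of the data centre network


def round_trip_ms(hops: list[Hop]) -> float:
    """Out and back across every hop in the list."""
    return 2 * sum(h.latency_ms for h in hops)


def job_time_ms(iterations: int, once: list[Hop], per_iteration: list[Hop]) -> float:
    """`once` hops are paid at submission/result time; `per_iteration` hops every iteration."""
    return round_trip_ms(once) + iterations * round_trip_ms(per_iteration)


def boundary_crossings(iterations: int, once: list[Hop], per_iteration: list[Hop]) -> int:
    """Count how many times data leaves the data centre's local network."""
    out_once = sum(h.leaves_site for h in once)
    out_each = sum(h.leaves_site for h in per_iteration)
    return 2 * out_once + iterations * 2 * out_each


# Previous flow: the optimiser loop runs on the user's machine.
PREVIOUS = ([], [Hop("user -> cloud", 10.0, True), Hop("cloud -> control server", 5.0, True)])

# Co-located flow: the loop runs on the GH200 inside the data centre.
COLOCATED = (
    [Hop("user -> GH200", 10.0, True)],
    [Hop("GH200 -> cloud", 0.5, False), Hop("cloud -> control server", 0.5, False)],
)

if __name__ == "__main__":
    for label, (once, each) in [("previous", PREVIOUS), ("co-located", COLOCATED)]:
        print(label, job_time_ms(100, once, each), "ms,",
              boundary_crossings(100, once, each), "boundary crossings")
```

Whatever the real latencies are, the structure is the point: in the co-located flow, traffic leaving the site is paid once per job rather than once per iteration.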

Near-term quantum usage is adjacent to classical compute, not a replacement for it. The NVIDIA CUDA Quantum framework was built to address the needs of hybrid applications. CUDA Quantum offers a single, high-performance-capable language that can be used to program both the quantum and classical sides of a solution. The framework offers flexible ways to create a quantum ansatz (the building block of a hybrid quantum program) and comes with a range of out-of-the-box utilities, such as optimisers. It's also relatively easy for quantum hardware providers like OQC to contribute to it and host our devices on it. The ability to work in either C++ or Python, thanks to the available bindings, is very useful. This is why we like CUDA Quantum at OQC.
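
To show the shape of the hybrid loop that an ansatz and an optimiser form, here is a minimal variational sketch in plain Python. In CUDA Quantum the ansatz would be written as a kernel and its expectation value obtained from the target (for example with `cudaq.observe`); to keep this sketch runnable without the SDK or hardware, the quantum step is replaced by its closed form. For the one-qubit ansatz $R_y(\theta)|0\rangle$, the expectation value is $\langle Z\rangle = \cos\theta$. The gradient uses the parameter-shift rule, and the learning rate and step count are arbitrary choices.

```python
import math
from typing import Callable


def expectation_z(theta: float) -> float:
    """<Z> for the one-qubit ansatz Ry(theta)|0>, which equals cos(theta).

    On hardware this would be estimated from shots on the QPU,
    so each call costs one quantum round trip.
    """
    return math.cos(theta)


def parameter_shift_gradient(f: Callable[[float], float], theta: float) -> float:
    """Parameter-shift rule for a Pauli-generated rotation: two extra evaluations."""
    return 0.5 * (f(theta + math.pi / 2) - f(theta - math.pi / 2))


def vqe(theta0: float = 0.1, learning_rate: float = 0.4, steps: int = 100):
    """Plain gradient descent on <Z>; returns (theta, energy, number of quantum calls)."""
    theta = theta0
    quantum_calls = 0
    for _ in range(steps):
        grad = parameter_shift_gradient(expectation_z, theta)
        quantum_calls += 2  # two shifted evaluations per gradient
        theta -= learning_rate * grad
    energy = expectation_z(theta)
    quantum_calls += 1  # final evaluation
    return theta, energy, quantum_calls


if __name__ == "__main__":
    theta, energy, calls = vqe()
    print(f"theta={theta:.6f}, <Z>={energy:.6f}, quantum calls={calls}")
```

The optimiser converges to $\theta = \pi$, where $\langle Z\rangle = -1$, after 201 quantum evaluations. Each of those evaluations would be a round trip to the QPU, which is why the latency between the classical and quantum sides matters so much.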

CUDA Quantum was released in June 2023 and builds on a long development history. It's built for high performance and scalability, and NVIDIA has embraced open-source efforts from the QIR Alliance community. OQC has teamed up with NVIDIA, first to bring OQC Lucy to CUDA Quantum, and more recently to release OQC Toshiko onto the framework.

We now have three interesting elements: an on-prem, co-located and upgradeable set of quantum computers; a software framework that gives algorithm developers the power to tackle a range of problems; and an NVIDIA GH200 Grace Hopper Superchip on premises. We have ample power, cooling and networking capacity for significant expansion. With our newest-generation quantum computers built on OQC Toshiko-grade QPUs, we will provide users with a seamless hybrid quantum-classical computing experience. By combining OQC's 32-qubit platform with NVIDIA technologies, we are paving the way for a secure and efficient quantum-classical runtime within commercial data centre environments. This is the kind of platform we need to engage industry and conduct further research into these nascent technologies.

The magic is the quantum computer in your backyard.

The collaboration gives users the additional compute capabilities of the NVIDIA GH200 Grace Hopper Superchip, which will supercharge hybrid algorithms without slowing down cloud-based execution. "The collaboration with NVIDIA offers our new and existing customers the option to use NVIDIA's high-quality software framework, while leveraging the NVIDIA GH200 Grace Hopper Superchip, to explore quantum solutions in their applications with our quantum hardware," said OQC CTO Simon Phillips. "It is a strategic step that will help quantum to overcome existing limitations, offering enhanced speed, accuracy, and scalability."

The OQC QCaaS model

Over the last year, we have radically changed our QCaaS solution from time-allocated resource booking to a resource-sharing model that achieves high throughput. Our previous approach suited our lab-based environments, which had fewer users, but it was not a good fit for our vision of secure, 24/7 on-demand access, which goes hand in hand with our colocation efforts. These quantum-enabled data centres have made our systems infrastructure more secure and resilient; in turn, our systems can be more available and offer a higher quality of service.

Our teams have drawn on cross-functional expertise to design a QCaaS model that is accessible and seamless for enterprises of any size. There are a few unique features of OQC's QCaaS that businesses are already using:

Co-processing tasks

We allow fair sharing of the QPU (open-swim) across a single very large window. We securely interleave tasks from different organisations for execution on the hardware. This simple execution model can be expanded in ways that benefit all users, rather than putting time-based users into contention.
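
One simple policy that interleaves tasks from several organisations without giving any of them exclusive time slots is round-robin over per-organisation queues. The Python sketch below illustrates that idea only. OQC's actual scheduling policy is not described here, so treating it as round-robin is an assumption, and the organisation and task names are hypothetical.

```python
from collections import deque


def interleave_fairly(queues: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Round-robin over organisations: take one task from each non-empty queue in turn."""
    pending = {org: deque(tasks) for org, tasks in queues.items()}
    order = deque(org for org in pending if pending[org])
    schedule = []
    while order:
        org = order.popleft()
        schedule.append((org, pending[org].popleft()))
        if pending[org]:  # organisation still has work: back of the line
            order.append(org)
    return schedule


if __name__ == "__main__":
    queues = {"org-a": ["a1", "a2", "a3"], "org-b": ["b1"], "org-c": ["c1", "c2"]}
    for org, task in interleave_fairly(queues):
        print(org, task)
```

With a single shared window, an organisation with one task (here `org-b`) runs early instead of waiting behind another organisation's whole batch.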

Parallel and offline compilation

More recently, we have brought in parallel and offline compilation, which allows tasks to be compiled down to the pulse level and stored until the system is available for execution. This means you no longer need to wait for an exclusive time window to submit your tasks. It enables good system throughput, and is particularly useful for our larger systems like OQC Toshiko, where high optimisation levels in circuit transpilation and large numbers of qubits make compilation time a significant part of the processing. The compilation process is highly scalable and can easily be load balanced. By avoiding serial compile-then-execute steps for each task, we can maximise the availability of the QPU for execution.
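
The decoupling described above can be sketched as a two-stage pipeline: compile many tasks in parallel, store the pulse-level results, and let execution drain the store whenever the QPU is free. In this Python sketch `compile_to_pulses` is a hypothetical stand-in for the real compiler. Threads are used only to keep the example portable; a CPU-bound compiler would more naturally use a `ProcessPoolExecutor` or a pool of compile servers.

```python
import queue
from concurrent.futures import ThreadPoolExecutor


def compile_to_pulses(circuit: str) -> str:
    """Hypothetical stand-in for compiling a circuit to a pulse-level program."""
    return f"pulses({circuit})"


def compile_offline(circuits: list[str], workers: int = 4) -> queue.Queue:
    """Compile all circuits in parallel and store the results, ready for the QPU."""
    ready: queue.Queue = queue.Queue()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map runs compilations concurrently but yields results in input order.
        for program in pool.map(compile_to_pulses, circuits):
            ready.put(program)
    return ready


def execute_when_available(ready: queue.Queue) -> list[str]:
    """Drain the store of compiled programs; the QPU never waits on compilation."""
    results = []
    while not ready.empty():
        results.append(f"executed {ready.get()}")
    return results


if __name__ == "__main__":
    store = compile_offline(["c1", "c2", "c3"])
    print(execute_when_available(store))
```

Because compiled programs sit in the store, compilation can scale out across workers independently of the single QPU that consumes them.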

A secure on-premise solution

We have also made our QCaaS system available completely on-premise for co-located users. This is what allows us to keep all our inbound and outbound traffic on the data centre's private network. Finding scalable, robust software infrastructure solutions without reverting to legacy server deployment was one of the major challenges we overcame. Because the same QCaaS solution now serves both public and private clouds, we can keep our development efforts lean as we innovate further in this environment.

"We've brought quantum computers front and centre in the compute stack by seamlessly integrating them into existing infrastructure. I can't wait to see what our users achieve with OQC Toshiko, fully integrated and an accessible part of their data centre computing power!"

Jamie Friel, Compiler Team Manager

Hybrid in your back garden

Our initial testing of these solutions shows a consistent 30% improvement in speed on hybrid jobs from network improvements alone. We've also identified a 2x improvement in round-trip times. Not only are the initial results promising, but this solution is available to anyone with a server in one of our connected data centres. There are many further options for improving this, all with your data remaining on-premises!
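
How a 2x round-trip improvement turns into a smaller overall gain follows Amdahl's law: only the network share of each job gets faster. The Python sketch below assumes that the whole 30% gain came from the 2x round-trip improvement and reads "30% improvement in speed" as a 1.3x speedup; both readings are assumptions, not figures from our measurements.

```python
def overall_speedup(network_fraction: float, network_speedup: float) -> float:
    """Amdahl's law: only the network share of the job time gets faster."""
    if not 0.0 <= network_fraction <= 1.0:
        raise ValueError("network_fraction must be between 0 and 1")
    return 1.0 / ((1.0 - network_fraction) + network_fraction / network_speedup)


def network_fraction_for(overall: float, network_speedup: float) -> float:
    """Invert Amdahl's law: which network share of job time yields a given overall speedup?"""
    if network_speedup <= 1.0:
        raise ValueError("network_speedup must be greater than 1")
    return (1.0 - 1.0 / overall) / (1.0 - 1.0 / network_speedup)


if __name__ == "__main__":
    f = network_fraction_for(overall=1.3, network_speedup=2.0)
    print(f"network share of job time implied by these assumptions: {f:.2f}")
```

Under these assumptions, network time would account for roughly 46% of a hybrid job's runtime before the move. The remaining non-network time caps how much further gain round-trip improvements alone can deliver.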
