QUICKBITS

From Cray to Quantum – Looking back to look forward

- In supercomputing, stability and maturity were driven by the need for long-term compatibility, cost reduction for both manufacturers and users, and accessibility.

- As supercomputing continues to develop, there is an innate need for a new generation of numerical algorithms that consider core processors, data movement, energy consumption and more to meet the demands of applications.

- As datasets continue to expand, the demand for computational power to process and analyse this data also increases.

- By harnessing the principles of quantum physics, quantum computers could help address energy consumption concerns and look to solve problems that have plagued society for years – accomplishing complex calculations 47 years quicker or 158 million times faster than any supercomputer.

Katy Alexander

MARKETING DIRECTOR

Katy is the Marketing Director at OQC. Prior to joining OQC, she developed and scaled marketing and analytics functions for startups and large listed companies. Passionate about using data to guide strategic decisions, Katy’s unique blend of analytical rigour and creative expression enables her to tackle diverse challenges effectively. In her spare time, she champions diversity in STEM through the creation of games and education resources for primary schools.

Predicting future trends in any industry is difficult, and it requires an understanding of past, present and likely future developments. In computing, and more importantly supercomputing, stability and maturity were driven by the need for long-term compatibility, cost reduction for both manufacturers and users, and accessibility. Supercomputers, though built on similar principles to general-purpose machines, prioritise performance above all else. They are exceptional in their ability to handle vast amounts of data and tackle complex problems that would overwhelm traditional systems. This is achieved through massive clusters of nodes, each equipped with multiple processors, memory, and symmetric multiprocessing capabilities. This computational prowess has revolutionised various fields, from climate science to drug discovery, by enabling simulations, data analysis, and modelling at scale.

Racing towards innovation

The supercomputing journey began with expensive, specialised architectures developed by companies such as Cray Research, Control Data and IBM. These machines, optimised for floating-point operations, advanced scientific research, particularly during the Cold War era, when the supercomputing gap was front and centre. They also underscored the pivotal role of technological advancement and supply chains in shaping global power dynamics.

Seymour Cray, a legend in the field, was pivotal in shaping the trajectory of supercomputing with designs including the CDC 6600 and the Cray-1. Recognising that processor speed alone wouldn’t suffice if data retrieval couldn’t keep pace, Cray advocated for a holistic approach emphasising swift data movement. The result was the CDC 6600.

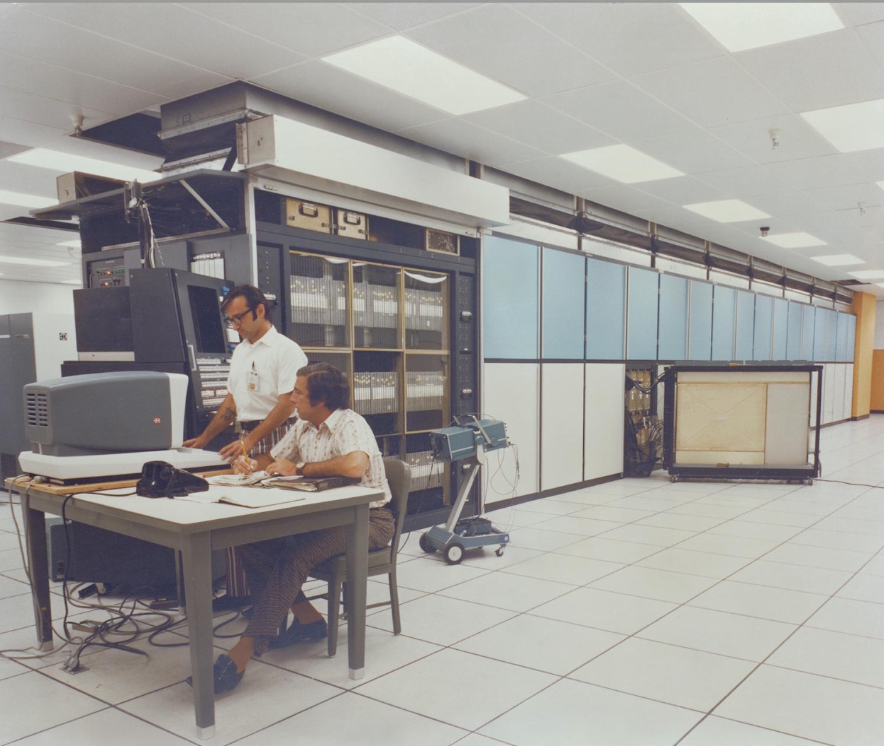

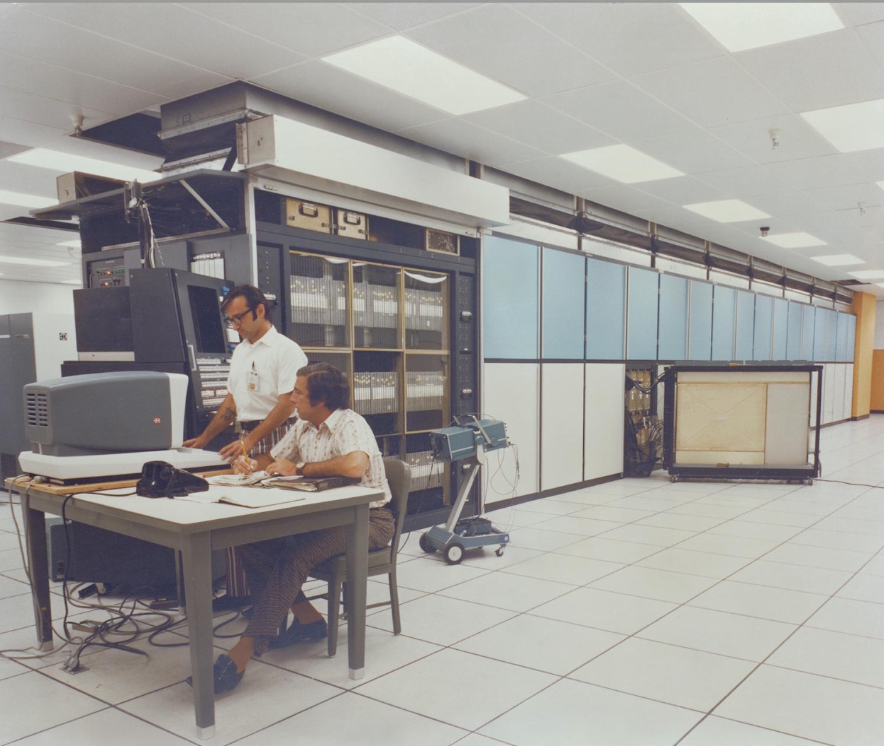

The CDC 6600 was a mainframe computer from Control Data Corporation

Introduced in 1964, the CDC 6600 defined the supercomputing market at the time, setting benchmarks for performance, cooling and scalability. A leaked IBM memo underscores just how impactful this device was:

Last week CDC had a press conference during which they officially announced their 6600 system. I understand that in the laboratory developing this system there are only 34 people, “including the janitor.” Of these, 14 are engineers and 4 are programmers, and only one has a Ph. D., a relatively junior programmer (…) I fail to understand why we have lost our industry leadership position by letting someone else offer the world’s most powerful computer.

IBM President Thomas Watson, Jr.

Co-processing was the next big step. Released in 1982, six years after its predecessor, the Cray-1, the Cray X-MP harnessed the power of co-processing and parallel processing. While these terms are commonplace today, they were novel when introduced in the 1970s and 1980s. The Cray X-MP essentially combined two Cray-1 computers, capitalising on multiprocessing to triple the performance achievable by a single unit. Having processors work in concert in this way laid the groundwork for unprecedented computing speeds, eventually surpassing the milestone of 1 billion floating-point operations per second (1 GFLOPS).

ILLIAC IV – credit NASA. In the 1970s, the ILLIAC IV was another example of large-scale parallel computing.

Danny Hillis, an MIT graduate, realised that distributed computing was the way of the future. His Connection Machine CM-1 had 65,536 simple one-bit processors, grouped onto 4,096 chips that were interconnected as a 12-dimensional hypercube. Massively parallel computing was born. It required software and operating systems to direct traffic between processors, which was no simple challenge. The advent of massively parallel computing led to the design of parallel hardware and software, as well as high performance computing.
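To illustrate why a hypercube suits this kind of machine, here is a minimal sketch of hypercube addressing in general. It is not a description of the Connection Machine's actual router; the function names are hypothetical and a small 4-dimensional cube is assumed for readability. Each node has a binary address, its neighbours differ from it in exactly one bit, and the shortest route between two nodes takes as many hops as their addresses have differing bits.

```python
def hypercube_neighbours(node: int, dimensions: int) -> list[int]:
    """Return the nodes one hop away by flipping each address bit in turn."""
    return [node ^ (1 << bit) for bit in range(dimensions)]


def hops(a: int, b: int) -> int:
    """Minimum hop count between two nodes: the number of differing address bits."""
    return bin(a ^ b).count("1")


# Assumed example: a 4-dimensional hypercube has 2**4 = 16 nodes.
DIMENSIONS = 4
print(hypercube_neighbours(0b0101, DIMENSIONS))
print(hops(0b0000, 0b1111))
```

The appeal of the layout is that the worst-case distance grows only with the number of dimensions, so doubling the node count adds a single hop.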

The escalating costs associated with fabricating faster processors prompted a change in market dynamics, as supercomputer vendors embraced commodity (off-the-shelf) hardware components to drive down costs and broaden access. This shift not only fostered competition and created new supply chains; more importantly, it democratised access to HPC systems.

As supercomputing continues to develop, there is an innate need for a new generation of numerical algorithms that consider core processors, data movement, energy consumption and more to meet the demands of applications. Similarly, as we begin to see computers attaining an exascale rate of computation, we will need software that extracts performance from these massively parallel machines: algorithms, software and hardware all play a pivotal role in this.

It is widely recognized that, historically, numerical algorithms and libraries have contributed as much to increases in computational simulation capability as have improvements in hardware.

Applied Mathematics Research for Exascale Computing, March Issue

Taking on the challenges

Today, supercomputers continue to play a pivotal role in advancing scientific research and technological innovation, powering simulations and calculations in fields ranging from quantum mechanics to climate research. But supercomputing is facing challenges on multiple fronts: one crucial consideration is the exponential growth in data volume and complexity across various fields, driven by advancements in data collection technologies such as sensors, satellites, and scientific instruments. As datasets continue to expand, the demand for computational power to process and analyse this data also increases.

Additionally, the complexity of computational tasks, particularly in fields like artificial intelligence, climate modelling, and molecular simulations, presents challenges for supercomputing architectures. These tasks often require massive parallel processing capabilities and specialised hardware accelerators for optimal performance.

The energy consumed by training large language models is estimated to have grown by a factor of 300,000 in six years, with AI model size doubling every 3.4 months.

And then there are the very important environmental considerations. Power consumption and cooling requirements don’t just pose practical limitations on the scalability of supercomputing infrastructure; they also present environmental ones. Supercomputers consume substantial amounts of power, ranging from 1 to 10 megawatts on average, equivalent to the electricity consumption of thousands of homes. The financial implications, with 1 megawatt equating to approximately $1 million a year in electricity costs, underscore the economic challenges of maintaining on-premises supercomputing infrastructure. This is a tremendous amount of energy for a single industry to be consuming. As power demands escalate for both computational processing and cooling, which they will, supercomputing facilities risk becoming economically and environmentally unsustainable.
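The cost figure can be sanity-checked with some simple arithmetic. The sketch below assumes the $1 million refers to a full year of continuous operation and uses a hypothetical electricity price of $0.114 per kWh. Neither assumption comes from the article, and real tariffs vary widely.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_electricity_cost(power_mw: float, price_per_kwh: float) -> float:
    """Cost in dollars of drawing power_mw continuously for one year."""
    kilowatts = power_mw * 1_000
    return kilowatts * HOURS_PER_YEAR * price_per_kwh


# Hypothetical tariff in dollars per kWh; an assumption, not a quoted figure.
ASSUMED_PRICE_PER_KWH = 0.114

for megawatts in (1, 10):
    cost = annual_electricity_cost(megawatts, ASSUMED_PRICE_PER_KWH)
    print(f"{megawatts:>2} MW for a year: about ${cost / 1e6:.1f} million")
```

Under these assumptions, a machine at the top of the 1 to 10 megawatt range would cost roughly ten times as much to power as one at the bottom, before cooling overheads are counted.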

These challenges have driven the quest for ever-greater computational power, with advancements like Nvidia’s H100 and Google’s TPUs. But is it enough? Technologies like quantum computing and neuromorphic computing hold the potential to process complex datasets and computational tasks that are currently beyond the reach of classical computing architectures, with far lower power requirements.

Alternative computing paradigms

By harnessing the principles of quantum physics, quantum computers could help address energy consumption concerns and look to solve problems that have plagued society for years, accomplishing complex calculations 47 years quicker or 158 million times faster than any supercomputer. The heart of this transformative potential lies in two areas. We must (1) continue the pursuit of a quantum computer capable of executing millions of error-free quantum operations and (2) provide the infrastructure that lets the technology step out of the lab, so that it can evolve and grow to support near-term quantum-classical hybrid needs.

The successful quantum computer must navigate all the challenges noted above as well as balance the need for innovation with practical constraints. This may involve internal development efforts or leveraging existing technologies in close alignment with industry standards. This is why it’s always been essential for OQC that we integrate these computers into a more heterogeneous computing environment and enable users to program hybrid quantum-classical workflows.

A significant takeaway from our experience of installing quantum computers in data centres is that the current requirements for QC-HPC integration are readily achievable with present-day technologies. The convergence of colocation data centres and quantum computers opens up exciting possibilities and ushers in a new era of hybrid computational power. OQC realised four critical factors early in our journey of bringing quantum into the hands of humanity:

- Quantum computers must fit within existing HPC infrastructure, considering physical dimensions, overall resource scaling, and specialised cooling requirements.

- A robust software stack with well-documented APIs and SDKs is essential for seamless integration into existing applications.

- Real-time monitoring tools are vital for tracking quantum system health and performance. Key performance indicators (KPIs) include queue time and system uptime.

- Scale needs to be manageable, something that is offered by our core IP.

In classical computer science, developers have access to a number of high-level languages, each offering various levels of abstraction and simplification. Quantum computing, however, is still at the gate level, devoid of high-level abstractions. QASM and QIR are community-driven projects aiming to establish a quantum program definition standard across the industry. Our toolchain can handle programs defined in either of these lower-level formats, and high-level packages that help generate them already exist.
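To make "gate level" concrete, here is a minimal sketch of what such a program looks like. It holds a standard two-qubit Bell-state circuit, written in OpenQASM 2.0, as a plain Python string and lists its operations in order. The circuit is a textbook example rather than anything taken from OQC's toolchain, and `list_operations` is a hypothetical helper written for this illustration, not a real parser.

```python
# A two-qubit Bell-state circuit in OpenQASM 2.0, kept as a plain string
# so that no quantum SDK is needed to run this example.
BELL_QASM = """\
OPENQASM 2.0;
include "qelib1.inc";
qreg q[2];
creg c[2];
h q[0];
cx q[0], q[1];
measure q[0] -> c[0];
measure q[1] -> c[1];
"""

# Statements that declare or configure things rather than act on qubits.
NON_OPERATIONS = {"OPENQASM", "include", "qreg", "creg"}


def list_operations(qasm: str) -> list[str]:
    """Return the name of each gate or measurement in program order (illustrative only)."""
    operations = []
    for statement in qasm.split(";"):
        words = statement.split()
        if words and words[0] not in NON_OPERATIONS:
            operations.append(words[0])
    return operations


print(list_operations(BELL_QASM))
```

Every line of the program names an individual gate or measurement on specific qubits. There are no loops, functions or data types to hide that detail, which is the gap higher-level tools aim to fill.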

The tipping point: Quantum Advantage

As with most historical technological progression, there will come a tipping point when quantum supersedes classical. Exactly when this crossover will occur is unclear, but reaching it is commonly termed ‘Quantum Advantage’. It is expected to require operations with very low error rates. Commercially and societally relevant quantum applications are predicted to require millions or even trillions of such operations, which is driving companies like OQC and Riverlane to focus on an exciting computational advantage: the ‘MegaQuop’ regime.

This regime marks a pivotal threshold where quantum processors can execute approximately one million quantum operations before succumbing to errors like decoherence. In most quantum computing setups, the error rates of operations typically hover between 0.0002 and 0.002, which limits a computation to somewhere between a few hundred and a few thousand operations before an error becomes likely. To breach the MegaQuop frontier, where one million operations can be reliably executed, error rates must plummet to below 0.000001.
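A rough way to relate an error rate to a usable operation count is to assume that each operation fails independently with the same probability p. This is a simplification made for this sketch, not a model of any real device. On average, about 1/p operations then run before the first error, and the chance that N operations all succeed is (1 - p)^N. The function names below are hypothetical.

```python
def expected_operations_before_error(error_rate: float) -> float:
    """Mean number of operations up to the first error (geometric distribution)."""
    return 1.0 / error_rate


def success_probability(error_rate: float, operations: int) -> float:
    """Chance that every one of `operations` independent operations succeeds."""
    return (1.0 - error_rate) ** operations


# Error rates quoted in the text: today's typical range and the MegaQuop target.
for p in (2e-3, 2e-4, 1e-6):
    ops = expected_operations_before_error(p)
    chance = success_probability(p, 1_000_000)
    print(f"p = {p:.0e}: ~{ops:,.0f} operations; "
          f"P(1,000,000 error-free) = {chance:.3g}")
```

Under this simple model, an error rate of exactly 0.000001 gives only about a 37% chance of completing a million operations cleanly, which suggests why the target is framed as getting below that figure rather than merely reaching it.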

OQC, like many other quantum companies, is exploring strategies like error mitigation, and this race towards MegaQuop entails a concerted effort to enhance hardware capabilities, innovate error-reduction techniques, and revolutionise quantum error correction. These efforts aim to propel our quantum computation beyond hardware limitations towards fault-tolerant operation. Our unique points of difference are our scalable qubit technology, our comprehensively designed enterprise-ready platform, and hardware-efficient error reduction methods.

Looking back to move forward

In the short term, the quantum computer isn’t going to replace the CPU and it’s not going to replace the GPU. The QPU will be used to accelerate certain types of subroutines that can help applications. By integrating quantum and classical computing resources, we will end up with a hybrid approach that uses the strengths of both paradigms.
